AI Code Generation: Context Compression

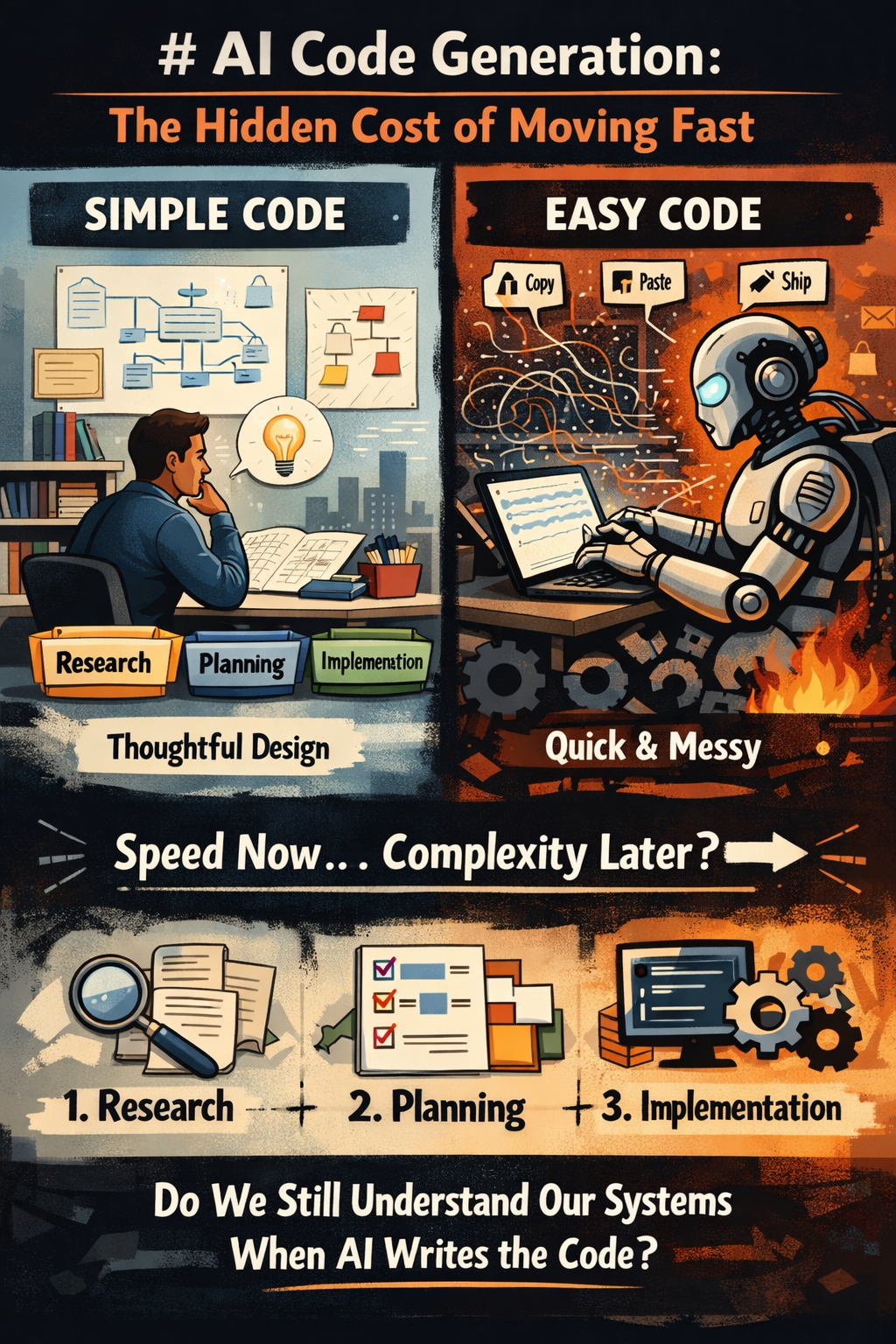

I recently watched a video by Jake Nations that fundamentally shifted how I think about AI-assisted development. The central question he poses is deceptively straightforward: sure, AI can generate code as fast as we can type prompts—but are we generating simple code or just easy code?

Simple vs. Easy: Not the Same Thing

Jake draws a crucial distinction here that resonates deeply with anyone who's inherited a nightmare codebase.

Simple means Single responsibility. Lack of entanglement. It's about the inherent lack of complexity in the design itself.

Easy means Copy, paste, ship. Install a package and move on. It's about convenience and speed of implementation.

Here's the rub: simplicity requires thought, design, and deliberate untangling. Easy is copying from Stack Overflow and installing dependencies without a second thought.

When we optimize for easy, we get speed now and complexity later. We've all been there.

Context Compression: A Three-Step System

To counter this tendency, Jake introduces what he calls context compression—sometimes referred to as context engineering. It's a three-step system designed to create simple (not easy) software systems. Systems that are well-designed and can actually survive production, meaning they're not hopelessly tangled and can be understood by humans.

The Fundamental Problem

- AI doesn't hold the entire context of your system

- AI treats existing code as patterns to preserve, which means it will happily replicate your existing mess at scale

The three steps are: Research, Planning, and Implementation.

Step 1: Research

Feed everything relevant upfront. I mean everything:

- Requirements documents

- Architecture diagrams

- Runbooks

- Design documents

Bring as much context as you can possibly gather, then use an AI agent to analyze the codebase. Run this analysis multiple times if needed.

The output should be a single comprehensive research document that captures:

- What exists

- How things connect to each other

- What your proposed change will affect

The human checkpoint is non-negotiable. This is where you validate the AI's analysis against reality. It's the highest-leverage moment in the entire process. Catch errors here, prevent disasters later.

Step 2: Planning

Based on that research document, create a detailed plan with actual code structures. We're talking:

- Exact flow of data

- Which files need modification

- What functions to create

- How components will interact

The plan needs to be so thorough that any developer on your team could follow it. This is where architectural decisions get made—by humans, not hallucinating language models.

Step 3: Implementation

Now that you have a clear specification from planning, you shouldn't expect a complexity spiral. You can hand off the implementation to a background agent and shift your attention elsewhere. During code review, you're simply verifying that the implementation matches your plan.

The Real Point

We're not using AI to think for us. We're using AI to accelerate the mechanical parts. Research and planning becomes faster, but thinking and judgment? Those remain with us.

This three-phase approach isn't a silver bullet. The goal is to produce code that we actually understand—code that we can reason about when things inevitably go sideways at 5 AM.

There's a massive difference between:

- A system that goes to production vs. a system that survives production

- A system that functions today vs. a system that can be changed by someone else six months from now

Skip the thinking—skip research and planning—and you lose the ability to recognize problems before they become production incidents.

Jake closes with a question that I think every development team needs to grapple with:

Do we still understand our systems when AI is writing most of the code?

I don't have a clean answer yet. But I know that treating AI as a shortcut to skip understanding is a debt we'll pay with interest.

Further Resources: